May 10, 2017

Do you want to learn or get comfortable with a programming language, an operating system, a database, a shell, etc.? You’re seriously considering it, but you’re worried about having to install a ton of stuff on your computer just to satisfy your curiosity? You’re considering it but, unfortunately, what you want isn’t available for your operating system? You’re considering it, but installing everything you’d need seems complicated?

Look no further! TutorialsPoint.com offers the CodingGround online environment! On top of that, all the online environments and online tutorials are free!

What is CodingGround? It’s a minimal online development environment that lets you edit, compile and run code. It’s a virtual machine that also gives you a Linux shell and a browser (to test your PHP, HTML or CSS, for example). For environments that support it, you can also change the compilation options. You can also have several source files, scripts, shells, browsers, etc. open at the same time.

CodingGround also lets you save, import, export, share and read all your projects with GitHub, DropBox, OneDrive and GoogleDrive! You can also simply keep all your projects on your own computer!

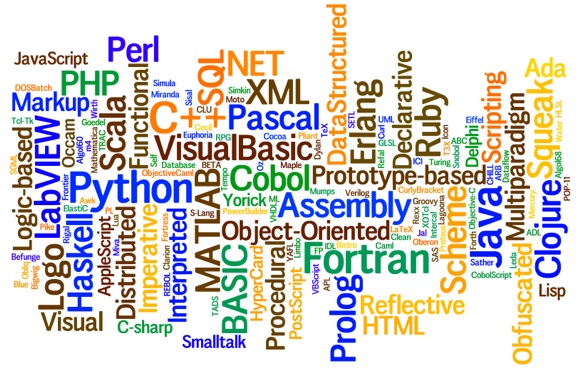

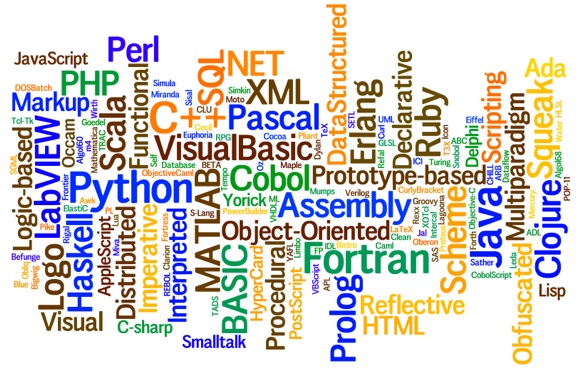

Which programming languages, operating systems, databases and development tools does CodingGround offer? A ton!

List of online terminals (shells)

List of online IDEs

Posted by endormitoire


April 3, 2017

We all know that guy. You know, that guy who sees performance improvements everywhere, all the time?

That programmer who squeezes everything into bitmaps because “it’s so much faster”? And when I say everything, I mean everything! Even if your class only has one flag!

That guy who caches everything in the application because “that’s the optimal way to do it”? Even if the cached data is only ever accessed once?

That guy who fits a date into an integer in the database because “it’s so much more compact”? There go all your SQL and date functions!

That developer who always ends up re-implementing another sorting algorithm because he read a “great paper” on the subject and “it’s proven that it’s 0.2% faster than the default sort” available? And after an insane debugging session, you finally realize he has overridden SortedCollection>>#sort: ? And that his code just doesn’t work properly!

You know, that guy with a C/C++ background who spends countless hours optimizing everything, not realizing he has to “make it work” first! You know, that guy who still doesn’t get that you often only need to optimize very small parts of an application to make a real difference?

You know that guy with strange concepts such as “defensive programming” who tells you nobody ever caught a bug in his code in production? You know, that guy who came up with the “clever” catch-everything method #ifTrue:ifFalse:ifNil:onError:otherwise: ?

You know that guy who never works for more than 4 months at the same place because “they didn’t get it, they’re a bunch of morons”?

You know, that guy who prefers to implement complex database queries with a #do: loop and a gazillion SELECT statements, instead of using JOINs, because “SELECT statements with a 1-row lookup are very optimized by the database server”? And then he blames the slow response time of his “highly optimized” data retrieval code on the incompetence of the DBAs maintaining the database?
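For anyone who has not run into this pattern, here is a rough Python sketch (not Smalltalk, and not that guy’s actual code) of the two approaches. The schema, table names and function names are all invented for the illustration; it only uses the standard `sqlite3` module with an in-memory database.

```python
import sqlite3

# Hypothetical two-table schema, invented for this illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Alice'), (2, 'Bob');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 2, 40.0), (12, 1, 15.5);
""")


def orders_with_names_loop(conn):
    """The loop style: one extra single-row SELECT per order."""
    orders = conn.execute(
        "SELECT id, customer_id, total FROM orders ORDER BY id"
    ).fetchall()
    result = []
    for order_id, customer_id, total in orders:
        name = conn.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()[0]
        result.append((order_id, name, total))
    return result


def orders_with_names_join(conn):
    """The JOIN style: a single statement, the database does the matching."""
    return conn.execute(
        "SELECT o.id, c.name, o.total "
        "FROM orders o JOIN customers c ON c.id = o.customer_id "
        "ORDER BY o.id"
    ).fetchall()


print(orders_with_names_loop(conn))
print(orders_with_names_join(conn))
```

Both functions return the same rows; the loop version simply issues one statement per order on top of the initial query, which is where the round trips pile up on a real database server.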

You know, that guy who once told me “inheritance in Smalltalk is very bad because the deeper the class in the hierarchy, the slower the method lookup is going to be”, so that’s why he always preferred “flattened hierarchies” (meaning promoting ALL instance variables into one root class) that are 2-3 levels deep in the worst case? “Besides, my code is easier to understand and debug since everything is in one place.”

Well, I was going through some of the sh*ttiest code I’ve seen in a long time last night and I remembered that guy and his ideas about “highly optimized flattened hierarchies” and thought I’d measure his theory! Here’s the script.

Basically, it creates a hierarchy of classes (10000 subclasses) and times message sends from the top class and the bottom class to measure the difference.
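The same kind of experiment can be sketched in Python for readers without a Smalltalk image at hand. This is not the script from the post: the names `build_chain`, `DEPTH` and `SENDS` are made up, the depth is kept far below 10000 so it runs quickly, and Python’s method lookup is not Smalltalk’s, so the numbers are not comparable to the ones reported here.

```python
import timeit


def build_chain(depth):
    """Create a root class plus `depth` nested subclasses; return (root, bottom)."""

    class Root:
        def answer(self):
            return 42

    current = Root
    for level in range(depth):
        # Each new class inherits from the previous one and adds nothing.
        current = type(f"Level{level + 1}", (current,), {})
    return Root, current


DEPTH = 200      # assumption: much smaller than the post's 10000 subclasses
SENDS = 100_000  # assumption: number of timed calls per receiver

root_cls, bottom_cls = build_chain(DEPTH)
root_obj, bottom_obj = root_cls(), bottom_cls()

# The method is defined only in Root, so the bottom instance inherits it
# through the whole chain.
root_time = timeit.timeit(lambda: root_obj.answer(), number=SENDS)
bottom_time = timeit.timeit(lambda: bottom_obj.answer(), number=SENDS)

print(f"root:   {root_time:.4f}s for {SENDS} calls")
print(f"bottom: {bottom_time:.4f}s for {SENDS} calls")
```

Whatever difference shows up depends on the runtime and how it caches lookups, which is exactly why measuring beats arguing.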

Well, that guy was right… There’s a 1 millisecond difference over 9 million message sends from the root class as compared to the 10000th class at the bottom of the hierarchy.

I wanted to tell that guy he was right but his email address at work is probably no longer valid…

CAVEAT: Make sure you do not have a category with the same name as the one in the script because the script removes the category at the end (and all classes in it!). Also, be warned it takes a very very very long time to execute!

P.S. All the stories above are real. No f*cking kidding! Those guys really existed: I’ve worked with them!


February 10, 2017

I’ve been dealing a lot with bit operations lately. And doing lots of benchmarking, like here. As I was looking for a bit count method in Pharo (it used to be there but it no longer exists in Pharo 5.0), I got curious about the many different versions of bit counting algorithms I could find on the internet.

What’s so special about bit operations you ask? Not much. Except when you have to do it really fast on 64bit integers! Like in a chess program! Millions of times per position. So instead of copying the #bitCount method that was in Squeak, I decided I’d have a look at what is available on the net…

So I decided to share what I found. This could potentially be useful for people who have to deal with bit counting a lot. Especially if you deal with 14 bits or less!
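To give an idea of the families of algorithms involved, here is a small Python sketch of four well-known bit counting techniques. These are not the #myBitCount or #myPopCount methods from the test code (their implementations are not shown in this post); the function names are invented, and the 14-bit table size is only chosen to echo the “14 bits or less” remark. All of them assume non-negative integers.

```python
def popcount_naive(n):
    """Test every bit, one shift at a time."""
    count = 0
    while n:
        count += n & 1
        n >>= 1
    return count


def popcount_kernighan(n):
    """Clear the lowest set bit per iteration: loops once per 1 bit."""
    count = 0
    while n:
        n &= n - 1
        count += 1
    return count


# Lookup table holding the bit count of every 14-bit value (16384 entries).
TABLE_BITS = 14
TABLE_MASK = (1 << TABLE_BITS) - 1
BIT_TABLE = [0] * (1 << TABLE_BITS)
for i in range(1, 1 << TABLE_BITS):
    BIT_TABLE[i] = BIT_TABLE[i >> 1] + (i & 1)


def popcount_table(n):
    """One table lookup per 14-bit chunk; a single lookup if n fits in 14 bits."""
    count = 0
    while n:
        count += BIT_TABLE[n & TABLE_MASK]
        n >>= TABLE_BITS
    return count


def popcount_swar64(n):
    """Classic parallel (SWAR) count for values below 2**64."""
    n = n - ((n >> 1) & 0x5555555555555555)
    n = (n & 0x3333333333333333) + ((n >> 2) & 0x3333333333333333)
    n = (n + (n >> 4)) & 0x0F0F0F0F0F0F0F0F
    # Python integers are unbounded, so the product is masked back to 64 bits.
    return ((n * 0x0101010101010101) & 0xFFFFFFFFFFFFFFFF) >> 56


if __name__ == "__main__":
    board = 0x8000000000000001  # hypothetical 64-bit value with 2 bits set
    print(popcount_naive(board), popcount_kernighan(board),
          popcount_table(board), popcount_swar64(board))
```

The table version does a single array access for small inputs, which is one plausible reason a dedicated 14-bit method can be far ahead of the general-purpose ones on small numbers.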

Here’s a typical run of the different bit counting algorithms I have tested on Squeak 5.1 64bit.

Number of calls per second, in millions (M), by size of the integer tested (a “-” means that combination was not part of the run):

| Method | 8 bits | 14 bits | 16 bits | 30 bits | 32 bits | 60 bits | 64 bits | 128 bits |
|---|---|---|---|---|---|---|---|---|
| myBitCount1 | 2.255 | 1.417 | 1.271 | 0.698 | 0.651 | 0.362 | 0.131 | 0.061 |
| myBitCount2 | 4.950 | 3.623 | 3.630 | 2.320 | 2.336 | 1.415 | 1.208 | 0.286 |
| myBitCount3 | 4.938 | 4.556 | 4.673 | 3.401 | 3.401 | 2.130 | 1.674 | 0.498 |
| myBitCount4 | 7.435 | 5.333 | 4.819 | 2.841 | 2.674 | 1.499 | 0.270 | 0.041 |
| myBitCount5 | 4.167 | 3.937 | 3.035 | 2.137 | 2.035 | 1.386 | 1.188 | 0.377 |
| myBitCount6 | 5.571 | 5.195 | 3.552 | 2.488 | 2.364 | 1.555 | 1.284 | 0.381 |
| myPopCount14bit | 18.519 | 18.349 | - | - | - | - | - | - |
| myPopCount24bit | 7.018 | 7.407 | 7.463 | - | - | - | - | - |
| myPopCount32bit | 4.608 | 4.963 | 5.013 | 4.608 | 4.619 | - | - | - |
| myPopCount64a | 2.755 | 2.778 | 2.793 | 2.751 | 2.703 | 2.809 | 1.385 | - |
| myPopCount64b | 3.091 | 3.063 | 3.096 | 3.106 | 3.053 | 3.008 | 1.444 | - |
| myPopCount64c | 3.945 | 1.625 | 1.600 | 1.542 | 1.529 | 1.566 | 1.082 | - |

Now, since method #myBitCount2 is similar to the #bitCount method in Squeak, that means there is still room for improvement if a faster #bitCount is needed. Now the question is: do we optimize it for the usual usage (SmallInteger), for 64bit integers, or do we use an algorithm that performs relatively well in most cases? Obviously, since I will always be working with 64bit positive integers, I have the luxury of picking the method that works best in my specific case!

All test code I have used can be found here.

Note: Rush fans have probably noticed the reference in the title…


February 10, 2017

We often take stuff for granted. But while preparing to embark on a crazy project (description in French here and Google translation in English here), I wanted to benchmark the bit manipulation operations in both Squeak and Pharo, for the 32bit and 64bit images (I am on Windows so the 64bit VM is not available for testing yet but it’ll come!). So essentially, it was just a test to compare the VM-Image-Environment combo!

To make a long story short, I was interested in testing the speed of 64bit operations on positive integers for my chess program. I quickly found some cases where LargePositiveInteger operations were 7 to 12 times slower than their SmallInteger equivalents and I became curious since it seemed like a lot. After more testing and discussions (both offline and online), someone suggested that some LargePositiveInteger operations could possibly be slow because they were not inlined in the JIT. It was then recommended that I override those methods in LargePositiveInteger (with primitives 34 to 37), thus shortcutting the default and slow methods in Integer (the corresponding named primitives primDigitBitAnd, primDigitBitOr, primDigitBitXor and primDigitBitShiftMagnitude in the LargeIntegers module). I immediately got a 2-3x speedup for LargePositiveInteger but…

Things have obviously changed in the Squeak 64bit image since some original methods (in class Integer) like #bitAnd: and #bitOr: are way faster than the overrides (in class LargePositiveInteger)! Is it special code in the VM that checks for 32bit vs 64bit (more precisely, 30bit vs 60bit integers)? Is it in the LargeIntegers module?
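The “30bit vs 60bit” remark is easy to check with a bit of arithmetic. The Python sketch below assumes the usual SmallInteger ranges, a maxVal of 2^30 - 1 in a 32bit image and 2^60 - 1 in a 64bit image; the function and constant names are invented for the illustration and nothing here comes from the VM source.

```python
# Assumed SmallInteger widths (signed, after the tag bits are taken out).
SMALLINT_BITS_32BIT_IMAGE = 31  # range -2**30 .. 2**30 - 1
SMALLINT_BITS_64BIT_IMAGE = 61  # range -2**60 .. 2**60 - 1


def fits_small_integer(value, signed_bits):
    """True if `value` fits in a signed integer of `signed_bits` bits."""
    limit = 1 << (signed_bits - 1)
    return -limit <= value < limit


def integer_kind(value, signed_bits):
    """Name the class a non-negative value would need under this assumption."""
    if fits_small_integer(value, signed_bits):
        return "SmallInteger"
    return "LargePositiveInteger"


if __name__ == "__main__":
    samples = {
        "2**30 - 1": 2**30 - 1,
        "2**40": 2**40,
        "2**60 - 1": 2**60 - 1,
        "2**63 (top bit of a 64bit value)": 2**63,
    }
    for label, value in samples.items():
        print(label,
              integer_kind(value, SMALLINT_BITS_32BIT_IMAGE),
              integer_kind(value, SMALLINT_BITS_64BIT_IMAGE))
```

Under that assumption, a value that uses all 64 bits is a LargePositiveInteger in both images, while anything between 2^30 and 2^60 - 1 is a large integer only in the 32bit image.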

Here are 2 typical runs for Squeak 5.1 32bit (by the way, Pharo 32bit image performs similarly) and Squeak 5.1 64bit images, in millions (M) of operations per second:

| Operation | Squeak 5.1 32bit | Squeak 5.1 64bit |
|---|---|---|
| #allMask: | 7.637 | 36.782 |
| #anyMask: | 8.333 | 41.026 |
| #bitAnd: | 17.877 | 139.130 |
| #bitAnd2: | 42.105 | 57.143 |
| #bitAt: | 12.075 | 23.358 |
| #bitAt:put: | 6.287 | 8.649 |
| #bitClear: | 6.737 | 38.554 |
| #bitInvert | 5.536 | 29.630 |
| #bitOr: | 15.764 | 139.130 |
| #bitOr2: | 34.409 | 58.182 |
| #bitXor: | 15.385 | 55.172 |
| #bitXor2: | 34.043 | 74.419 |
| #highBit | 12.451 | 7.921 |
| #<< | 6.517 | 10.127 |
| #bitLeftShift2: | 8.399 | 12.800 |
| #lowBit | 10.702 | 6.823 |
| #noMask: | 7.064 | 39.024 |
| #>> | 7.323 | 23.188 |
| #bitRightShift2: | 29.358 | 56.140 |

Both runs also printed the same sanity checks:

- Method #bitAnd2: seems to work properly! Override of #bitAnd: in LargeInteger works!
- Method #bitOr2: seems to work properly! Override of #bitOr: in LargeInteger works!
- Method #bitShift2: (left & right shifts) seems to work properly! Override of #bitShift: in LargeInteger works!
- Method #bitXor2: seems to work properly! Override of #bitXor: in LargeInteger works!

So now, I’m left with 2 questions:

- Why exactly does the override work (in 32bit images)?

- What changed so that things are different in Squeak 5.1 64bit image (overrides partially work)?

If you’re curious/interested, the code I have used to test is here.

Leave me a comment (or email) if you have an explanation!

To be continued…


August 18, 2016

Need a quick and simple way to obfuscate integer ids? Try Hashids! A useful tool to transform integers into encoded strings.

As of now, it’s been implemented in 36 programming languages! And yes, you guessed it, also in Smalltalk (in Pharo but can easily be ported to any other Smalltalk flavor)!
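To show the general idea of turning an integer id into a short string and back, here is a toy Python sketch. It is deliberately NOT the Hashids algorithm and does not use any Hashids library: the class `ToyIdCodec`, its salt handling and its alphabet are all invented for the illustration, and it offers no security whatsoever.

```python
import random
import string


class ToyIdCodec:
    """Reversible integer <-> string encoding with a salt-shuffled alphabet.

    Hypothetical illustration only; this is not how Hashids works.
    """

    def __init__(self, salt):
        alphabet = list(string.ascii_letters + string.digits)
        # Seeding with the salt makes the shuffle repeatable for that salt.
        random.Random(salt).shuffle(alphabet)
        self.alphabet = "".join(alphabet)
        self.base = len(self.alphabet)
        self.index = {ch: i for i, ch in enumerate(self.alphabet)}

    def encode(self, number):
        if number < 0:
            raise ValueError("only non-negative integers are supported")
        # Plain positional notation, using the shuffled alphabet as digits.
        digits = [self.alphabet[number % self.base]]
        number //= self.base
        while number:
            digits.append(self.alphabet[number % self.base])
            number //= self.base
        return "".join(reversed(digits))

    def decode(self, text):
        number = 0
        for ch in text:
            number = number * self.base + self.index[ch]
        return number


if __name__ == "__main__":
    codec = ToyIdCodec("my salt")
    token = codec.encode(12345)
    print(token, codec.decode(token))
```

The point is only that the id no longer shows up as a bare number in a URL while still being recoverable by whoever knows the salt.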


August 18, 2016

If you’re like everyone else, you think regular expressions suck but sometimes you have no choice but to use them because that’s the best tool for the job…

Here comes Rubular to the rescue! A nice and easy-to-use regex editor that allows you to test your expressions on the fly!

And if you need to know more about regular expressions, there’s the excellent free book Regular Expression Recipes for Windows Developers, A Problem-Solution Approach.
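The same try-it-on-sample-strings workflow can be reproduced locally. Here is a minimal Python sketch using the standard `re` module; the date pattern, the sample strings and the helper `try_pattern` are made up for the example, and since Rubular is a Ruby regex editor, some syntax details may differ from Python’s.

```python
import re

# Hypothetical pattern: an ISO-style date such as 2016-08-18.
DATE_PATTERN = re.compile(r"^(\d{4})-(\d{2})-(\d{2})$")

# Sample inputs, some of which should deliberately fail to match.
SAMPLES = ["2016-08-18", "18/08/2016", "2016-8-18"]


def try_pattern(pattern, samples):
    """Map each sample to its captured groups, or None when it does not match."""
    results = {}
    for text in samples:
        match = pattern.match(text)
        results[text] = match.groups() if match else None
    return results


if __name__ == "__main__":
    for text, groups in try_pattern(DATE_PATTERN, SAMPLES).items():
        print(f"{text!r}: {groups}")
```

Keeping a handful of strings that must match and a handful that must not is a cheap way to catch a regex that is too greedy or too strict.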
